The Deepfake Epidemic: The Alarming Scams and How to Fight Back

Deepfakes have blurred the line between truth and deception, creating a crisis in trust. With AI evolving at an alarming pace, the risks of manipulated media are growing, and the stakes have never been higher.

In February 2025, iProov tested 2,000 UK and US consumers and found that only 0.1% could correctly distinguish all real content from deepfakes. Older participants were more likely to be fooled.

This highlights the growing challenge of detecting AI-manipulated content and how little the public is prepared for it. As AI advances, so does the potential for misuse. Fortunately, AI is also making strides in detecting deepfakes, offering hope in the fight against digital deception.

Deepfake Dangers: A Digital Crisis Unfolding

The realistic forgeries of deepfakes can easily deceive people, leading to identity theft, misinformation, and fraud. Here are some of the biggest threats deepfakes present.

1. Identity Theft and Fraud: When AI Becomes a Master Impersonator

Deepfakes can make it appear that someone is saying or doing something they never did, opening doors to impersonation in financial scams and fraudulent schemes. By cloning a person's likeness or voice, malicious actors can deceive others into thinking they are interacting with the real person.

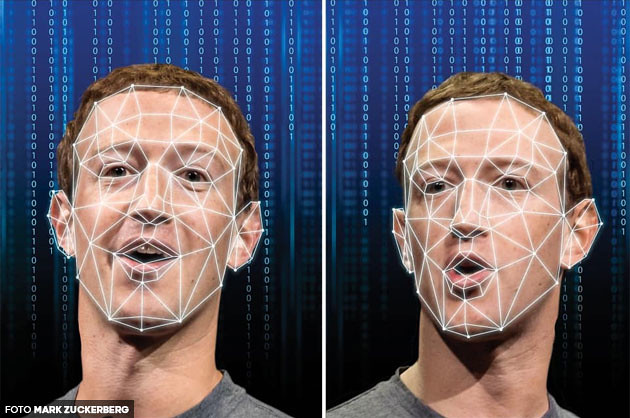

In 2019, a deepfake of Facebook CEO Mark Zuckerberg went viral, making him appear to boast about controlling billions of people's stolen data. The video was entirely fake, but it demonstrated how realistic manipulated videos can be, blurring the line between fact and fiction.

2. Non-Consensual Explicit Content: A Nightmare for Victims

One of the most notorious uses of deepfake technology is the creation of non-consensual explicit content, mainly targeting women. Victims, including celebrities and private individuals, have seen their faces superimposed onto explicit videos without their consent.

Actress Scarlett Johansson, for instance, has been vocal about the inadequate legal protections against deepfake abuse, especially non-consensual explicit content. While acknowledging AI's creative potential, she emphasized that its misuse severely violates privacy, with current laws falling short in addressing the issue.

3. Executive Impersonation: The $25 Million AI Scam

Cybercriminals are increasingly using deepfake technology to impersonate senior executives in spear-phishing attacks. By creating AI-manipulated audio or video, they can deceive employees into transferring funds or disclosing sensitive information.

A British engineering firm, Arup, lost $25 million when scammers used a deepfake to pose as the company’s CFO, convincing an employee to transfer funds to bank accounts in Hong Kong.

4. Personal Privacy Violations

With deepfake tools becoming increasingly available, anyone’s digital footprint, including photos or videos shared on social media, can be exploited to create harmful, falsified media. This infringes on personal privacy and can devastate a person’s personal and professional life.

In 2021, a Pennsylvania woman was charged with cyber harassment for creating deepfake images of her daughter’s cheerleading rivals, making it appear they were drinking, smoking, and nude. She allegedly manipulated social media photos to damage their reputations and sent the doctored images to the girls, even suggesting they kill themselves.

5. Undermining Democratic Processes

Deepfakes can be weaponized in politics, producing fabricated videos of politicians saying or doing controversial things to manipulate public perception. At a time when disinformation spreads rapidly online, the impact of a well-crafted deepfake could be catastrophic, potentially influencing election outcomes or international relations.

In 2023, the Never Back Down PAC released an ad targeting Donald Trump. The ad attacked Trump for criticizing Iowa Governor Kim Reynolds, but it was later confirmed that Trump’s voice in the ad was AI-generated and read out a post he had made on Truth Social. The ad aired statewide in Iowa, backed by a $1 million investment.

6. Academic Integrity Challenges

Deepfake technology poses new challenges to academic integrity, allowing applicants to falsify their identities during interviews or assessments. This makes it harder for universities to verify authenticity, raising concerns about fairness and security in the admissions process as AI-driven manipulation becomes more sophisticated.

UK universities faced deepfake challenges as applicants used AI-generated videos to fake identities during automated interviews. In response, universities have enhanced verification and detection systems to prevent fraud and protect the integrity of their admissions processes.

What’s the Defense Against Deepfake Deception?

Countering deepfakes requires a multi-pronged approach: leveraging advanced technology, raising public awareness, and implementing strong regulatory measures. Together, these let us better detect, prevent, and manage deepfake misuse, ultimately protecting privacy, security, and trust in digital media.

AI Tools to Counter Deepfakes

Advanced AI and machine learning tools are essential for detecting and flagging deepfakes. These systems analyze inconsistencies in digital media to spot signs of manipulation. Digital watermarking and signatures also help verify the authenticity of content by embedding unique identifiers. Such tools help investigators trace the origins of deepfakes, providing critical evidence for legal action. Three of them are described here.
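
To make the idea of signing content concrete, here is a minimal sketch of how a publisher might attach a tag to a media file and how a recipient might check it later. It uses an HMAC from the Python standard library as a stand-in; real provenance systems typically rely on public-key signatures, and the function names and demo key below are assumptions made for illustration, not part of any tool discussed in this article.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, secret_key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag computed over the raw media bytes."""
    return hmac.new(secret_key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, secret_key: bytes, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_media(media_bytes, secret_key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"publisher-demo-key"            # hypothetical key for the demo
    original = b"bytes-of-the-original-video"
    tag = sign_media(original, key)

    # The untouched file verifies; any change to the bytes does not.
    print("original verifies:", verify_media(original, key, tag))
    print("edited verifies:", verify_media(original + b"x", key, tag))
```

A check like this only proves that a file is unchanged since it was signed. It says nothing about whether the content was genuine in the first place, which is why it complements detection rather than replacing it.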

1. Reality Defender

This versatile deepfake detection platform is designed to identify manipulated content in images, videos, audio, and text. Reality Defender's patented multi-model approach uses AI to detect forgeries in real time, making it suitable for enterprises, governments, and industries that deal with sensitive media. Its detection process operates without requiring watermarks or prior authentication, ensuring quick and reliable content verification.

Key Features

- Multi-Media Detection: It detects deepfakes across images, video, audio, and text, providing comprehensive coverage.

- Real-Time Detection: It offers fast, watermark-free analysis to authenticate content in real time.

- Flexible Integration: It is available via a web app or API, allowing seamless integration into existing workflows.

- Explainable AI: It provides precise, color-coded manipulation probabilities and detailed reports for easy understanding.
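
As a rough illustration of what a multi-model score with color-coded output could look like, the sketch below averages the scores of several detectors and maps the result to a color band. This is not Reality Defender's actual method or API. The model names, the simple averaging, and the 0.3 and 0.7 thresholds are all assumptions chosen for the example.

```python
from statistics import mean


def combine_scores(model_scores: dict[str, float]) -> float:
    """Average per-model manipulation probabilities (each in [0, 1])."""
    if not model_scores:
        raise ValueError("need at least one model score")
    for name, score in model_scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {name!r} is outside [0, 1]")
    return mean(list(model_scores.values()))


def color_band(probability: float) -> str:
    """Map a probability to a color; the cut-offs are illustrative only."""
    if probability >= 0.7:
        return "red"       # likely manipulated
    if probability >= 0.3:
        return "yellow"    # uncertain, worth a manual look
    return "green"         # likely authentic


if __name__ == "__main__":
    # Hypothetical detector outputs for one video.
    scores = {"face_model": 0.9, "audio_model": 0.8, "artifact_model": 0.7}
    combined = combine_scores(scores)
    print(round(combined, 2), color_band(combined))
```

Averaging is the simplest way to combine detectors. A production system would more plausibly weight models by their measured accuracy on each media type.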

Pros

- Comprehensive Detection: The tool covers multiple media formats.

- Real-Time Results: It immediately identifies manipulated content.

- Scalable: The tool is suitable for small and large media volumes.

Cons

- Enterprise Focused: The tool is not ideal for individual users.

- Custom Pricing: There is a lack of transparency on pricing.

2. Sentinel

Democratic governments, defense agencies, and European enterprises widely use this AI-based deepfake detection platform. Sentinel allows users to upload digital media through its website or API for analysis, detecting any signs of AI-generated manipulation. The system offers detailed reports and visualizations showing where and how the media has been altered.

Key Features

- AI-Powered Detection: It analyzes digital media to detect deepfake manipulation using advanced AI algorithms.

- Visualization of Manipulation: It provides visual insights, highlighting specific areas of media that have been altered.

- API Access: The tool allows organizations to integrate Sentinel’s detection capabilities into their workflows.

Pros

- Government-Grade Security: It is trusted by leading defense and government agencies.

- Detailed Visual Reports: It offers in-depth visual breakdowns of manipulated content.

Cons

- Enterprise Focused: The tool is primarily targeted at larger organizations.

- Not Suited to Individuals: It is not ideal for casual or individual users.

3. Attestiv

This tool offers a commercial-grade deepfake detection platform suitable for individuals, influencers, and businesses. It analyzes uploaded videos or social media links for signs of deepfake manipulation. Attestiv is particularly useful in sectors requiring high integrity and security, such as banking, insurance, and media. It uses proprietary AI to score content and break down fake elements in detail.

Key Features

- Video and Social Media Analysis: It examines uploaded videos and social media links for deepfake content.

- AI-Driven Scoring: The tool provides detailed scoring and analysis of fake elements in videos.

- Future Authenticity Checks: It applies unique digital "fingerprints" to videos, enabling future verification of content authenticity.
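
One way to picture a digital fingerprint is as a list of hashes, one per segment of the file, stored at the time the video is captured. The sketch below assumes that approach and is not a description of Attestiv's proprietary technique. The function names and the 1,024-byte segment size are hypothetical.

```python
import hashlib


def fingerprint_segments(media_bytes: bytes, segment_size: int = 1024) -> list[str]:
    """Return one SHA-256 hex digest per fixed-size segment of the media."""
    if segment_size <= 0:
        raise ValueError("segment_size must be positive")
    return [
        hashlib.sha256(media_bytes[i:i + segment_size]).hexdigest()
        for i in range(0, len(media_bytes), segment_size)
    ]


def changed_segments(stored: list[str], media_bytes: bytes,
                     segment_size: int = 1024) -> list[int]:
    """Return indexes of segments that differ from the stored fingerprint."""
    current = fingerprint_segments(media_bytes, segment_size)
    changed = []
    for i in range(max(len(stored), len(current))):
        old = stored[i] if i < len(stored) else None
        new = current[i] if i < len(current) else None
        if old != new:  # altered, added, or missing segment
            changed.append(i)
    return changed


if __name__ == "__main__":
    original = bytes(range(256)) * 16           # 4,096 bytes, four segments
    stored = fingerprint_segments(original)     # saved at capture time

    tampered = bytearray(original)
    tampered[2000] ^= 0xFF                      # flip one byte in segment 1
    print(changed_segments(stored, bytes(tampered)))
```

Hashing per segment, rather than hashing the whole file once, lets a later check point to where a file changed. Byte-level hashes do break whenever a platform re-encodes the video, so this sketch only suits files that are stored and compared unmodified.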

Pros

- Free Option Available: The tool provides basic access for casual users.

- Detailed Breakdown: It offers a precise analysis of manipulated content.

- Versatile Use: Attestiv is suitable for businesses and individuals alike.

Cons

- Limited Free Features: Advanced tools are only available in paid plans.

- Focus on Video: The tool primarily focuses on video and social media analysis.

Public Awareness and Media Literacy

Educating the public on identifying and evaluating digital content is key to reducing the impact of deepfakes. Media literacy initiatives encourage fact-checking, seeking reliable sources, and recognizing suspicious content.

Social media platforms should take responsibility by flagging deepfakes, verifying content, and enabling users to report potential misinformation.

Regulatory and Policy Initiatives

Strong regulatory frameworks are crucial to combat deepfake misuse. Laws should address source verification and the spread of manipulated content.

The EU’s AI Act, which entered into force in August 2024, categorizes AI applications by risk level and attaches obligations to each category, ranging from transparency and oversight requirements to outright prohibitions, according to the threat posed.

Technological Advancements in Deepfake Detection

AI is being used to fight back against deepfakes with detection technologies that can identify subtle markers in manipulated media.

These detection systems can analyze inconsistencies in facial movements, audio sync, or video artifacts that are difficult for deepfake algorithms to replicate perfectly. Companies including Microsoft and Facebook have launched initiatives to develop and improve these tools, and public competitions backed by $10 million in funding have spurred innovation in this space.
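
The audio-sync check can be sketched in a few lines. The example below assumes that some upstream step has already extracted two per-frame series from a clip: how open the speaker's mouth is and how loud the audio is. It then flags the clip when the two series do not move together. The function names, the input series, and the 0.5 threshold are hypothetical. Real detectors learn far richer features than a single correlation.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation of two equal-length series (0.0 if either is flat)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def looks_out_of_sync(mouth_openness: list[float], audio_energy: list[float],
                      threshold: float = 0.5) -> bool:
    """Flag a clip whose lip movement does not track the audio loudness."""
    return pearson(mouth_openness, audio_energy) < threshold


if __name__ == "__main__":
    mouth = [0.1, 0.8, 0.2, 0.9, 0.1, 0.7]          # hypothetical per-frame values
    audio_matching = [0.2, 0.9, 0.3, 1.0, 0.2, 0.8]
    audio_shifted = [0.9, 0.2, 1.0, 0.3, 0.8, 0.2]
    print(looks_out_of_sync(mouth, audio_matching))
    print(looks_out_of_sync(mouth, audio_shifted))
```

A low correlation is only a hint, since background music or an off-screen speaker would trigger it too. That is one reason such signals are normally combined with other checks.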

Ongoing Efforts and Future Directions

The growing threat of deepfakes has led to a multi-pronged approach to combat their misuse. Legal frameworks are expanding, with countries drafting specific laws to regulate deepfake creation and distribution.

Meanwhile, social media companies are tightening their content policies, removing harmful deepfake videos that could mislead users.

On the technological front, AI-based detection tools are improving fast, giving individuals and institutions more reliable ways to detect manipulated media. However, as deepfake scams evolve, so must detection tools and regulatory responses.

Staying ahead of the problem requires ongoing collaboration between governments, tech companies, and researchers to ensure that deepfake technology is used responsibly and that malicious applications are minimized.